CPU Pinning in Telco Cloud

Telecom infrastructure worldwide (RAN, Core, and IMS) is moving towards cloud and virtualization, where network nodes run as applications on VMs and share virtual resources (compute, storage, networking, etc.).

Service providers need these VMs to be placed on physical infrastructure that has enough capability to deliver telco-grade performance and resiliency.

The cloud should support various workloads with different resource requirements (CPU, memory, switching) and different performance requirements in terms of latency and throughput. When an operator instantiates a VM, the OpenStack Nova scheduler finds a physical host that can meet all of its requirements. To achieve precise VM placement, Nova uses a detailed filter framework. Based on filtering and weighing (ranking of the hosts that pass the filters), the Nova scheduler selects a physical host with the capabilities that the telecommunication application needs.

Voice and video processing VMs are latency sensitive. Such VMs should be hosted on physical servers with CPU pinning. To keep latency low and meet high CPU processing requirements, each virtual CPU is bound to one physical CPU, i.e. an oversubscription ratio of 1:1, which can be achieved with CPU pinning.
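The filter-then-weigh idea can be illustrated with a small sketch. This is not Nova's actual code or API: the `Host` and `Request` classes, the three filter functions, and the weighing rule (prefer the host with the most free RAM) are all hypothetical names and choices made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    # Hypothetical view of a compute host's free capacity
    name: str
    free_pcpus: int
    free_ram_mb: int
    supports_pinning: bool


@dataclass
class Request:
    # Hypothetical description of what the VM needs
    vcpus: int
    ram_mb: int
    needs_pinning: bool


def cpu_filter(host: Host, req: Request) -> bool:
    return host.free_pcpus >= req.vcpus


def ram_filter(host: Host, req: Request) -> bool:
    return host.free_ram_mb >= req.ram_mb


def pinning_filter(host: Host, req: Request) -> bool:
    return host.supports_pinning or not req.needs_pinning


FILTERS = [cpu_filter, ram_filter, pinning_filter]


def weigh(host: Host):
    # Assumed weighing rule: most free RAM first, then most free pCPUs
    return (host.free_ram_mb, host.free_pcpus)


def select_host(hosts: List[Host], req: Request) -> Optional[Host]:
    # Step 1: filtering removes hosts that cannot satisfy the request
    candidates = [h for h in hosts if all(f(h, req) for f in FILTERS)]
    if not candidates:
        return None
    # Step 2: weighing ranks the survivors and picks the best one
    return max(candidates, key=weigh)


hosts = [
    Host("host-a", free_pcpus=4, free_ram_mb=8192, supports_pinning=True),
    Host("host-b", free_pcpus=16, free_ram_mb=32768, supports_pinning=False),
    Host("host-c", free_pcpus=16, free_ram_mb=16384, supports_pinning=True),
]
chosen = select_host(hosts, Request(vcpus=8, ram_mb=8192, needs_pinning=True))
print(chosen.name if chosen else "no valid host")
```

In this made-up inventory, `host-a` is filtered out for lack of CPUs and `host-b` because it does not offer pinning, so only `host-c` reaches the weighing step.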

CPU Pinning Concept

CPU pinning is one of the techniques used to improve the processing efficiency of application VMs. Most hypervisor environments, such as RHOS and OpenStack, support vCPU pinning.

When vCPU pinning is enabled for an application (VNF), the vCPUs of a particular VM are confined to a specific set of pCores on the physical host. Most deployment environments use dedicated vCPU pinning.

When vCPU pinning is not used, the vCPUs of a particular VM may execute on any pCore of the physical host. Each hardware thread can then be shared among 2, 4, or more vCPUs, e.g. an oversubscription ratio of 2:1 or 4:1.
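The effect of the oversubscription ratio on capacity is simple arithmetic. The sketch below assumes a hypothetical host with 40 hardware threads available to guests; the helper name `max_vcpus` and the figures are illustrative, not taken from any particular deployment.

```python
def max_vcpus(host_threads: int, ratio: int) -> int:
    """Number of vCPUs a host can offer at a given vCPU:pCPU ratio."""
    if host_threads < 0 or ratio < 1:
        raise ValueError("host_threads must be >= 0 and ratio >= 1")
    return host_threads * ratio


HOST_THREADS = 40  # assumed number of threads left for guest VMs

for ratio in (1, 2, 4):
    print(f"{ratio}:1 -> {max_vcpus(HOST_THREADS, ratio)} vCPUs")
```

At 1:1 (the pinned case) every vCPU has a thread to itself, so the host offers fewer vCPUs but each one gets predictable performance. At 2:1 or 4:1 the same host offers more vCPUs, but they compete for thread time.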

Why CPU Pinning is required

- Exclusive assignment of CPUs to a virtual machine, with no CPU sharing

- Maximized CPU cache efficiency

- Easier grouping and separation of VMs within NUMA nodes

- Faster memory access

CPU Pinning Example

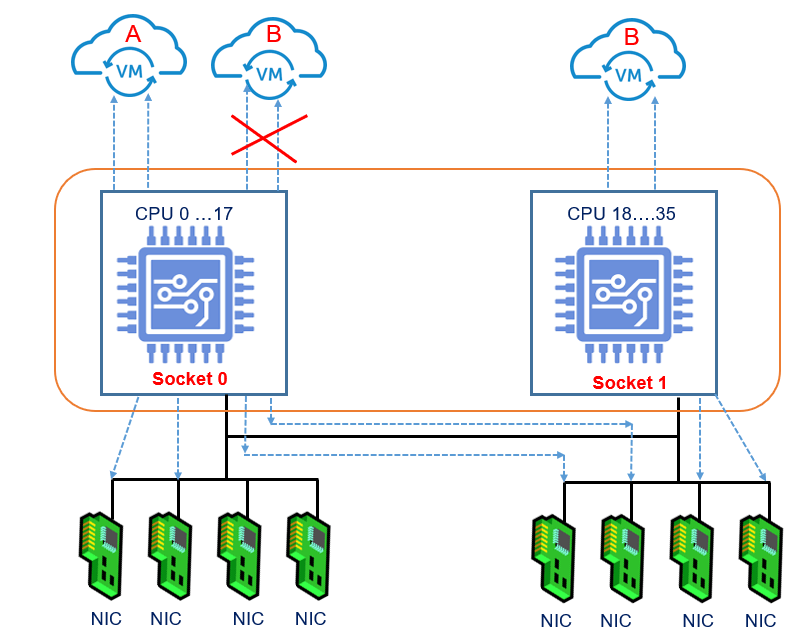

In the image below, the vCPUs of VM-A are assigned to socket 0 of the physical server. VM-B cannot use CPU resources on socket 0 because of the dedicated pinning.

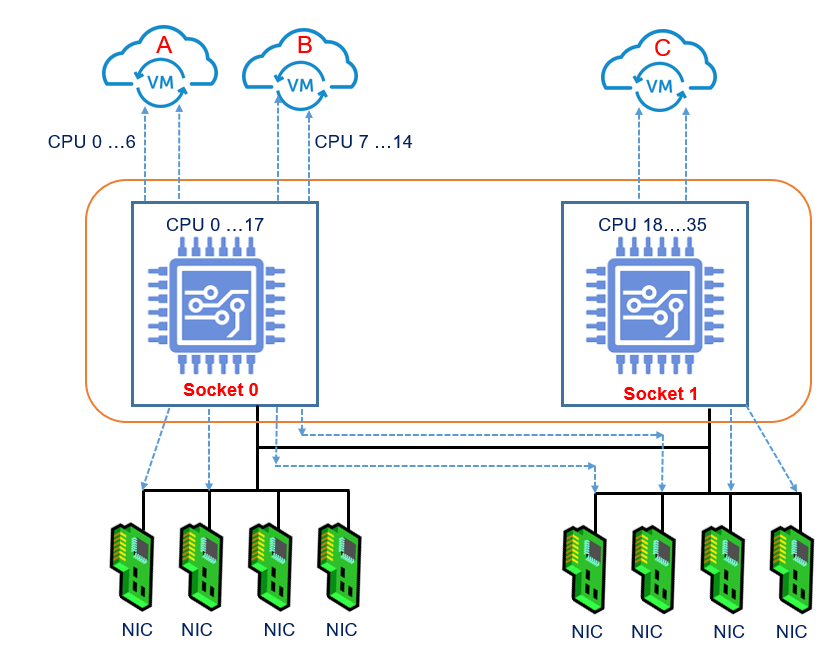

Each socket has to reserve some CPUs for the hypervisor and the virtual switch, depending on the NFVI environment; this is not shown here. Also, when the VMs are not too large, multiple VMs can be pinned per socket, as shown in the figure.
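A minimal sketch of this bookkeeping follows, assuming a hypothetical two-socket server with eight cores per socket and two cores per socket set aside for the hypervisor and switch. The function name `pin_vms`, the core numbering, and the VM sizes are illustrative assumptions. Real platforms keep this state inside the hypervisor and scheduler rather than in a script like this.

```python
from typing import Dict, List, Tuple


def pin_vms(
    sockets: Dict[int, List[int]],
    reserved_per_socket: int,
    requests: List[Tuple[str, int, int]],
) -> Dict[str, List[int]]:
    """Assign dedicated cores to VMs; each request is (vm_name, socket, vcpus)."""
    # Set aside the first N cores of every socket for the hypervisor and switch
    free = {s: sorted(cores)[reserved_per_socket:] for s, cores in sockets.items()}
    pin_map: Dict[str, List[int]] = {}
    for vm, socket, vcpus in requests:
        if len(free[socket]) < vcpus:
            raise ValueError(f"socket {socket} has too few free cores for {vm}")
        # Dedicated pinning: cores handed to one VM are removed from the pool
        pin_map[vm] = free[socket][:vcpus]
        free[socket] = free[socket][vcpus:]
    return pin_map


# Assumed topology: cores 0-7 on socket 0, cores 8-15 on socket 1
topology = {0: list(range(0, 8)), 1: list(range(8, 16))}
requests = [("VM-A", 0, 4), ("VM-B", 1, 4), ("VM-C", 0, 2)]

for vm, cores in pin_vms(topology, 2, requests).items():
    print(vm, cores)
```

With these assumed numbers, VM-A and VM-C share socket 0 without sharing any core, while a further request for socket 0 would be rejected because all unreserved cores there are already pinned.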

Article Submitted By: Vijay Sharma

Vijay is an IMS and virtualization testing professional. He has many years of experience working with VoLTE, LTE/4G, and IMS nodes at major OEMs and ODMs in various roles.
