Fog Computing and Internet of Things

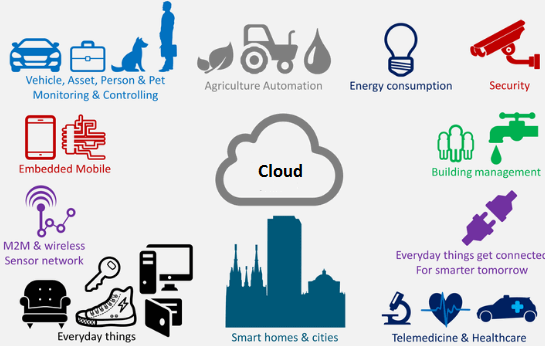

The Internet of Things is expanding, creating opportunities in many different areas, and will shape new business models that meet customers’ needs. Opportunities, productivity and growth all rely on data.

Sensor devices in Internet of Things (IoT) networks constantly generate an unprecedented volume and variety of data. After collection, the data is sent to the cloud for statistical analysis, forecasting and data analytics in support of business goals. A cloud is a network of servers in another location that provides centralized resources such as computation and storage. The problem with the cloud is simply distance: cloud servers have the power to process and mine large data sets, but they are too far away to process data and respond in real time. Because of that distance, the cloud model can be a problem in environments where operations are mission-critical or internet connectivity is less than ideal.

A better solution is to distribute the computing requirements and bring processing closer to the edge of the network, reducing the amount of data that is sent to the cloud for processing and analysis. There is huge value in having access to real-time information and analytics from device-generated data, which helps businesses make critical split-second decisions. Bringing computing resources and application services closer to the edge is known as fog computing.

Common Types of Cloud Computing

Even though both cloud computing and fog computing provide storage, processing and data to end users, fog computing sits closer to end users and is more widely distributed geographically. There are different types of cloud computing, and some are defined below:

- Cloud computing – A model that uses a network of remote servers hosted on the Internet to store, manage, and process data, rather than a local server or a personal computer. Cloud computing can be a heavyweight and dense form of computing power.

- Fog computing – A term created by Cisco that refers to extending cloud computing to the edge of an enterprise’s network. Also known as edge computing or fogging, fog computing facilitates the operation of compute, storage, and networking services between end devices and cloud computing data centers. It is a medium-weight, intermediate level of computing power.

- Mist computing – A lightweight and rudimentary form of computing power that resides directly within the network fabric at the extreme edge of the network, using microcomputers and microcontrollers to feed into fog computing nodes and potentially onward to cloud computing platforms.

Today’s IoT Infrastructure Requirements

IoT requires a new kind of cloud infrastructure to support the volume, variety and velocity of data generated by sensors. Today’s cloud models are not designed to support such requirements. Billions of previously unconnected devices are generating more than two exabytes of data each day. An estimated 50 billion “things” will be connected to the Internet by 2020. Moving all data from these things to the cloud for analysis would require vast amounts of infrastructure.

These billions of new things also represent countless new types of things, such as sensors, actuators, cameras and industrial robots. Some are machines that connect to a controller using industrial protocols, not IP. Before their data can be sent to the cloud for analysis or storage, it must be translated to IP.
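The translation step can be pictured with a small sketch. It assumes a made-up fixed-size binary frame of the kind a controller might emit on a non-IP link; the frame layout, the sensor codes and the `frame_to_json` helper are all hypothetical and do not describe any real industrial protocol. The gateway decodes the frame and re-encodes it as JSON text, which can then travel over any IP transport.

```python
import json
import struct

# Hypothetical frame layout (an assumption, not a real fieldbus format):
#   2 bytes  device id    (unsigned integer, big-endian)
#   1 byte   sensor code  (0 = temperature, 1 = pressure)
#   4 bytes  value        (32-bit float, big-endian)
FRAME_FORMAT = ">HBf"
SENSOR_TYPES = {0: "temperature", 1: "pressure"}


def frame_to_json(frame: bytes) -> str:
    """Decode one fixed-size binary frame and re-encode it as JSON text."""
    device_id, sensor_code, value = struct.unpack(FRAME_FORMAT, frame)
    message = {
        "device_id": device_id,
        "sensor": SENSOR_TYPES.get(sensor_code, "unknown"),
        "value": value,
    }
    return json.dumps(message, sort_keys=True)


# Build a sample frame the way the hypothetical controller would send it.
raw_frame = struct.pack(FRAME_FORMAT, 17, 0, 21.5)
payload = frame_to_json(raw_frame)
```

In a real deployment the decoding rules would come from the specification of the industrial protocol in use, and `payload` would be handed to whatever IP-based messaging layer the site has chosen.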

IoT devices generate data constantly, and analysis must often be very rapid. For example, when the temperature in a chemical vat is fast approaching the acceptable limit, corrective action must be taken almost immediately. The same is true when the heart rate of a patient in an ICU starts to rise, or when vehicles communicate with each other to avoid a collision. In all these cases, by the time a measurement has travelled from the edge to the cloud for analysis, the opportunity to act might be lost. Handling the volume, variety, and velocity of IoT data requires a new computing architecture, and the main requirements of such a model are listed below:

- Minimize Latency: Milliseconds matter when you are trying to prevent a manufacturing line shutdown, restore electrical service, save a patient’s life or avoid a vehicle collision. Analyzing data close to the device that collected it can make a big difference.

- Conserve Bandwidth: Offshore oil rigs generate 500 GB of data weekly. Commercial jets generate 10 TB for every 30 minutes of flight. It is not practical to transport vast amounts of data from thousands or hundreds of thousands of edge devices to the cloud. Nor is it necessary, because many critical analyses do not require cloud-scale processing and storage.

- Security Concerns: IoT data needs to be protected both in transit and at rest. This requires monitoring and automated response across the entire attack continuum: before, during, and after an attack.

- Reliable Operation: IoT data is increasingly used for decisions affecting citizen safety and critical infrastructure. The integrity and availability of the infrastructure and data cannot be in question.

- Data collection over wide geographic area with different environmental conditions: IoT devices can be distributed over hundreds or more square miles. Devices deployed in harsh environments such as roadways, railways, utility field substations, and vehicles might need to be ruggedized. That is not the case for devices in controlled, indoor environments.

- Best Place for Data Processing: The best place to process data depends partly on how quickly a decision is needed. Extremely time-sensitive decisions should be made closer to the things producing and acting on the data. In contrast, big data analytics on historical data needs the computing and storage resources of the cloud.

Traditional cloud computing architectures do not meet all of these requirements. Moving all data from the network edge to the data center for processing adds latency. Traffic from thousands of devices soon outstrips bandwidth capacity. Industry regulations and privacy concerns prohibit offsite storage of certain types of data. In addition, cloud servers communicate only over IP, not the countless other protocols used by IoT devices. The ideal place to analyze most IoT data is near the devices that produce and act on that data.
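To get a feel for the bandwidth figures quoted in the requirements above, the following sketch converts a data volume produced over a period into the average link rate needed to ship it all to the cloud as it is generated. It assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes) and ignores protocol overhead; the function and variable names are illustrative only.

```python
GB = 1e9   # bytes, decimal units (an assumption)
TB = 1e12  # bytes, decimal units (an assumption)


def sustained_mbps(total_bytes: float, seconds: float) -> float:
    """Average rate in megabits per second needed to move the data as it is produced."""
    return total_bytes * 8 / seconds / 1e6


# One offshore oil rig: 500 GB per week.
oil_rig_mbps = sustained_mbps(500 * GB, 7 * 24 * 3600)

# One commercial jet: 10 TB per 30 minutes of flight.
jet_mbps = sustained_mbps(10 * TB, 30 * 60)

print(f"oil rig: {oil_rig_mbps:.1f} Mbit/s")
print(f"jet:     {jet_mbps / 1000:.1f} Gbit/s")
```

Under these assumptions a single rig needs roughly 6.6 Mbit/s around the clock, and a single jet roughly 44 Gbit/s while in flight. Multiplied across thousands of devices, that is the reason to analyze data near where it is produced and forward only what the cloud actually needs.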

What is Fog Computing?

Fog computing is a combination of hardware and software that decentralizes the cloud and extends it closer to the things, so that real-time data from these things can be monitored, analyzed and acted upon. The fog can reduce data-analysis time from minutes to seconds. High-speed data processing is essential in situations where milliseconds can have fatal consequences, such as the diagnosis and treatment of patients or vehicle-to-vehicle communication to prevent collisions and accidents.

The devices that do this work, called fog nodes, can be deployed anywhere with a network connection: on a factory floor, on top of a power pole, alongside a railway track, in a vehicle, or on an oil rig. Any device with computing, storage, and network connectivity can be a fog node. Examples include industrial controllers, switches, routers, embedded servers, and video surveillance cameras.

Analyzing IoT data close to where it is collected minimizes latency, offloads gigabytes of traffic from the core network, and keeps sensitive data inside the network. In addition, having all endpoints connect to the cloud and send it raw data over the internet can raise privacy, security and legal issues, especially when the data is sensitive or confidential and subject to regulation.

Features of Fog Computing

- Analyzes the most time-sensitive data at the network edge, close to where it is generated instead of sending vast amounts of IoT data to the cloud.

- Acts on IoT data in milliseconds, based on policy.

- Sends selected data to the cloud for historical analysis and longer-term storage.
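The three features above can be sketched in a few lines. This is a minimal illustration rather than a real fog platform: the `FogNode` class, the temperature limit and the `open_cooling_valve` command are hypothetical, loosely modelled on the chemical vat example. Each reading is checked against a local policy as soon as it arrives, and only a compact summary is prepared for the cloud.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FogNode:
    """Hypothetical fog node that watches a single temperature sensor."""

    limit_c: float  # policy: act when a reading reaches this value
    readings: List[float] = field(default_factory=list)

    def ingest(self, temperature_c: float) -> Optional[str]:
        """Buffer the reading and apply the local policy immediately."""
        self.readings.append(temperature_c)
        if temperature_c >= self.limit_c:
            return "open_cooling_valve"  # hypothetical actuator command
        return None

    def summary_for_cloud(self) -> dict:
        """Reduce the buffered raw readings to a small summary, then clear the buffer."""
        if not self.readings:
            return {"count": 0}
        summary = {
            "count": len(self.readings),
            "min": min(self.readings),
            "max": max(self.readings),
            "mean": sum(self.readings) / len(self.readings),
        }
        self.readings.clear()
        return summary


node = FogNode(limit_c=90.0)
commands = [node.ingest(t) for t in (70.0, 80.0, 95.0)]
summary = node.summary_for_cloud()
```

Here only the third reading triggers a command, and the cloud receives four numbers instead of every raw sample. A production node would also need to handle sensor faults, persistence and secure transport, which this sketch leaves out.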

How Fog Computing Works

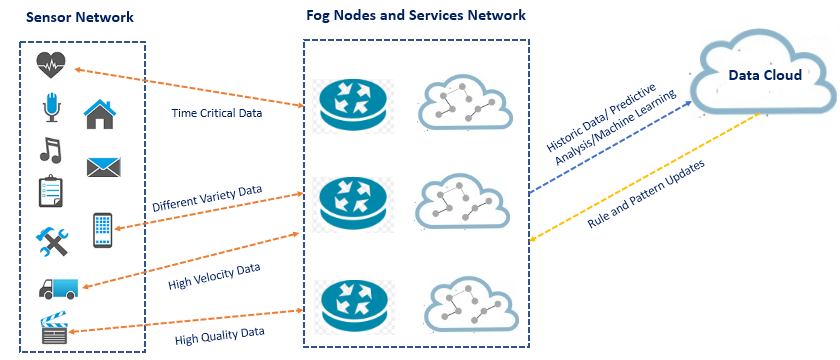

Developers either port existing IoT applications or write new ones for fog nodes at the network edge. The fog nodes closest to the network edge ingest the data from IoT devices. Then, and this is crucial, the fog IoT application directs different types of data to the optimal place for analysis, as follows:

- The most time-sensitive data is analyzed on the fog node closest to the things generating the data. In a smart grid, for example, the most time-sensitive requirement is to verify that protection and control loops are operating properly. Therefore, the fog nodes closest to the grid sensors can look for signs of problems and then prevent them by sending control commands to actuators.

- Data that can wait seconds or minutes for action is passed along to an aggregation node for analysis and action. In the Smart Grid example, each substation might have its own aggregation node that reports the operational status of each downstream feeder and lateral.

- Data that is less time-sensitive is sent to the cloud for historical analysis, big data analytics, and long-term storage. For example, each of thousands or hundreds of thousands of fog nodes might send periodic summaries of grid data to the cloud for historical analysis and storage.
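The routing decision described in the list above can be sketched as a function that maps how long a piece of data can wait to the tier that should analyze it. The two cut-off values below are assumptions chosen for illustration, since the right thresholds depend on the application; the names `Tier` and `choose_tier` are hypothetical as well.

```python
from enum import Enum


class Tier(Enum):
    FOG_NODE = "fog node"
    AGGREGATION_NODE = "aggregation node"
    CLOUD = "cloud"


# Hypothetical cut-offs; tune them per application.
FOG_DEADLINE_S = 1.0            # must be handled in under a second
AGGREGATION_DEADLINE_S = 600.0  # can wait seconds or minutes


def choose_tier(deadline_s: float) -> Tier:
    """Pick the analysis tier from how long the data can wait for action."""
    if deadline_s < FOG_DEADLINE_S:
        return Tier.FOG_NODE
    if deadline_s <= AGGREGATION_DEADLINE_S:
        return Tier.AGGREGATION_NODE
    return Tier.CLOUD


# Smart grid flavoured examples (the deadlines are illustrative).
protection_loop = choose_tier(0.05)     # Tier.FOG_NODE
feeder_status = choose_tier(30.0)       # Tier.AGGREGATION_NODE
daily_summary = choose_tier(86_400.0)   # Tier.CLOUD
```

Keeping the rule in one small function makes it easy to adjust the thresholds without touching the code that ingests or forwards the data.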

IoT Applications

Fog computing can provide location services and quality of service for real-time applications and streaming. It also supports greater heterogeneity, because it connects directly to end-user devices and routers. Applications include industrial automation, transportation and sensor networks, and some of these are listed below:

- Smart Grid: Fog collectors process the data from energy load balancing applications, such as micro-grids and smart meters, issue commands to the actuators and generate real-time reports. Depending on energy demand and availability, the devices automatically switch to alternative energy sources (wind and solar).

- Smart Traffic Lights (connected vehicles): Video cameras on the streets can automatically detect certain vehicles, such as ambulances, and change the traffic lights to open lanes through the traffic. They can also identify the presence of pedestrians and calculate the distance and speed of an approaching vehicle.

- Wireless Sensor and Actuator Networks: Unlike traditional wireless sensors, actuators can exert physical actions. They can control the measurement process, stability and oscillatory behavior, and send alerts.

- Decentralized Smart Building Control: Wireless sensors measure temperature, humidity and gas levels in a building, and this information is exchanged among all sensors in order to form reliable measurements. Fog devices and sensors react to the data and make decisions such as lowering the temperature or removing moisture from the air, creating smart buildings.
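As one illustration of the smart building case, the sketch below combines readings from several sensors into a single value and then decides what to do. Using the median as the combining rule is an assumption made here because it tolerates a single faulty sensor; the source does not say how sensors reach a reliable measurement. The thresholds and action names are hypothetical too.

```python
from statistics import median
from typing import List

# Hypothetical comfort limits for one room.
MAX_TEMPERATURE_C = 25.0
MAX_HUMIDITY_PCT = 60.0


def fuse(readings: List[float]) -> float:
    """Combine readings from several sensors; the median ignores a single outlier."""
    return median(readings)


def decide(temperature_c: float, humidity_pct: float) -> List[str]:
    """Return the actions a fog device would request for the fused values."""
    actions = []
    if temperature_c > MAX_TEMPERATURE_C:
        actions.append("lower_temperature")
    if humidity_pct > MAX_HUMIDITY_PCT:
        actions.append("remove_moisture")
    return actions


# Three sensors per quantity; one temperature sensor is clearly off.
room_temperature = fuse([26.1, 26.4, 40.0])
room_humidity = fuse([65.0, 70.0, 68.0])
actions = decide(room_temperature, room_humidity)
```

With these sample readings the faulty 40.0 value does not distort the result, and the fog device requests both cooling and dehumidification.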

Standardization Body

The OpenFog Consortium is a group of developers, manufacturers and software companies that share ideas on how to expand the use of the fog in the Internet of Things ecosystem. Fog computing supplements the cloud and will continue to expand as it improves efficiency and reduces the amount of data processed by the cloud. The consortium’s website is www.openfogconsortium.org.

The consortium was founded in 2015 by Cisco Systems, Intel, Microsoft, Princeton University, Dell, and ARM Holdings and now has 57 members across North America, Asia, and Europe, including big companies and noteworthy academic institutions.