The evolution toward 6G wireless telecommunication networks envisions hyper-intelligent, self-optimizing Radio Access Networks (RAN) capable of meeting extreme performance requirements such as ultra-low latency, high reliability and massive connectivity. The deployment of agentic artificial intelligence (AI) systems into 6G telecommunication networks presents both significant opportunities and risks. While AI-driven automation can enable intelligent, adaptive Radio Access Networks (AI-RAN), it also introduces the risk of unsafe, non-deterministic, or policy non-compliant agentic decisions.

Telecommunication networks are strategic and critical infrastructure tightly governed by national policies. These resilient networks provide always-on data pipelines that are the lifeblood of a nation’s economy. Agentic AI systems integrated into such networks are therefore expected, as a baseline, to have appropriate guardrails at every stage of the agentic workflow, lest AI-driven RAN decisions result in service disruptions, security breaches, or regulatory violations. This paper explores the concept of guardrailing agentic AI systems designed for applications in the 6G AI-RAN, outlining the challenges, architectural principles, safety mechanisms, and potential research directions.

AGENTIC AI SYSTEM COMPONENTS

Figure 1 depicts the components of an agentic AI system. The choice of models, tools, body of knowledge and guardrails is specific to the application domain. In advanced wireless systems, typically: many small LLMs are trained for specific tasks; the tools include planning and analysis tools specific to wireless networks (in addition to general computing and data representation tools); and the knowledge comprises documentation on applicable industry standards, national telecom policies and regulations, and the operator’s own operating policies. The safety guardrails are discussed in a subsequent section.

CHALLENGES IN AGENTIC AI FOR RAN

Integrating agentic AI systems into live RAN operations poses unique challenges. Some of these are described below.

- Non-deterministic outputs leading to unsafe actions: AI systems don’t always behave the same way when given similar input conditions. This unpredictability can lead to unsafe actions, e.g., an AI agent might decide to boost power in one tower to improve throughput while causing unwanted signal interference with a neighbouring tower.

- Difficulty in rollback or containment after unsafe actions: Agentic actions may not come with a simple “undo” button. Once a bad action is taken, rolling it back takes time and effort. For example, an AI agent changes Tx power and handover parameter settings on 50 cell towers at once in an attempt to improve cell-edge peak throughput but, contrary to the goal, the changes worsen coverage by creating new coverage gaps (Figure 2). Because the engineers cannot instantly revert everything, thousands of users may face dropped calls, handover failures or internet outages for a few minutes.

- Emergent (new) behaviors that destabilize performance (KPIs) or service slices: When AI agents interact with complex networks, they sometimes learn unexpected new behaviors. For instance, an agent tasked with balancing network load across the available service slices (e.g., voice, video, gaming, IoT) allocates most resources to video users for smoother YouTube streaming (Figure 3). In doing so, however, it starves voice users and IoT sensors (such as smart meters or health monitors). This breaks the operator’s service promises (SLAs) to voice and IoT customers.

- Conflicts with national regulations or operator’s own operational policies: AI doesn’t always understand rules and laws unless explicitly trained on them. For instance, an agent may increase Tx power levels beyond the legally permitted EIRP (Effective Isotropic Radiated Power) and therefore accidentally violate national spectrum regulations (Figure 4). This could lead to hefty penalties for the operator.

- Lack of transparency or explainability in AI-generated decisions: Agentic AI systems often behave like a “black box”: their outputs can be hard to interpret, and it is often unclear why they made a particular decision (Figure 5). In telecom networks, where every action potentially affects revenue, service quality and compliance, not knowing the “why” can be risky.

Imagine that mobile internet suddenly slows down and engineers find that the AI has rerouted traffic between towers. But when they ask, “Why did you do this?”, the agentic system is unable to provide a clear explanation. This lack of explainability makes it difficult to debug problems quickly, build trust in the AI system, and assure the regulators that the actions are policy compliant.

GUARDRAILING PRINCIPLES & ARCHITECTURE

To ensure safe deployment of AI-driven RAN automations, adherence to the following guardrailing principles is essential.

- Least Authority: Agents only propose intents and the final actions pass through strict machine as well as human validation. This will prevent a single faulty inference from causing widespread service disruption.

- Deterministic Boundaries: All AI outputs must pass through strict schema, unit, and policy checks before being applied. Such measures ensure that the outputs remain within well-defined safe envelopes.

- Safety over Performance: The system defaults to rollback/deny in uncertain cases to prevent instability and protect customer experience, even if it means sacrificing temporary performance gains.

- Progressive Autonomy: Agentic actions are first carried out in shadow mode (observation only), then progress to canary nodes (limited live testing), and finally to cluster-wide rollout once proven safe. This minimizes risk by ensuring new behaviors are thoroughly validated before they reach the wider network.

- Auditability: For transparency and explainability of all decisions, the agentic AI system shall maintain detailed logs (of agent decisions, validation steps carried out, rollback events, etc.), paired with explainability tools (e.g., showing why a parameter change was recommended). This helps build operator confidence, simplify troubleshooting, and ensure regulatory compliance.
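As a minimal sketch of how the first three principles might combine, the snippet below reviews a proposed Tx power change without ever applying it. The names `PowerIntent` and `review`, the agent-reported `confidence` score, and the 65 dBm ceiling are all assumptions made for illustration; real EIRP limits depend on the band, the licence and the national regulator.

```python
from dataclasses import dataclass

# Hypothetical regulatory ceiling, for illustration only.
MAX_EIRP_DBM = 65.0


@dataclass(frozen=True)
class PowerIntent:
    """A proposed change; the agent never applies it directly (Least Authority)."""
    cell_id: str
    tx_power_dbm: float
    antenna_gain_dbi: float
    cable_loss_db: float


def eirp_dbm(intent: PowerIntent) -> float:
    # EIRP (dBm) = transmit power (dBm) + antenna gain (dBi) - feeder loss (dB)
    return intent.tx_power_dbm + intent.antenna_gain_dbi - intent.cable_loss_db


def review(intent: PowerIntent, confidence: float, min_confidence: float = 0.9) -> str:
    """Return 'deny', 'escalate' or 'approve'; only 'approve' may proceed."""
    if eirp_dbm(intent) > MAX_EIRP_DBM:
        return "deny"      # Deterministic Boundaries: outside the safe envelope
    if confidence < min_confidence:
        return "escalate"  # Safety over Performance: hand over to a human reviewer
    return "approve"
```

The function only returns a verdict; a separate, validated component would be responsible for acting on an approval.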

SAFETY ARCHITECTURE FOR A GUARDRAILED AI-RAN

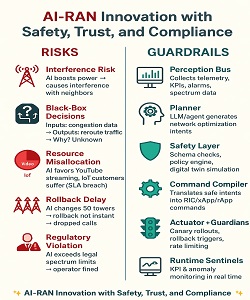

The proposed guardrailing architecture for an AI-RAN comprises the following layers (Figure 6).

- Perception Bus: Collects telemetry, counters, KPIs, alarms, and spectrum data.

- Planner: LLM or agent generates intents (e.g., optimize BLER).

- Safety Layer: Schema validation, policy engine, and digital twin simulation.

- Command Compiler: Translates safe intents into RIC/xApp/rApp commands.

- Actuator with Guardians: Canary rollouts, rollback triggers, and rate limits.

- Runtime Sentinels: KPI and anomaly monitoring.

- Audit Logs: Immutable record of decisions and rationales.

Figure 6: AI-RAN Safety Layer in action: Illustrative example
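One possible way to approximate the Audit Logs layer is a hash-chained, append-only log, sketched below. This is an assumption about how such a layer could be built, not a description of the proposed architecture; the class name `AuditLog` and the record fields are hypothetical. Note that a hash chain makes tampering detectable rather than impossible, so true immutability would still need write-once storage or similar.

```python
import hashlib
import json


class AuditLog:
    """Append-only log in which each entry carries the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder hash that precedes the first entry

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(record, prev_hash):
        # Canonical JSON (sorted keys) so the same record always hashes the same.
        payload = json.dumps(record, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record):
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        entry_hash = self._digest(record, prev_hash)
        self._entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self):
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"intent": "optimize BLER", "verdict": "approve", "rationale": "twin OK"})
log.append({"intent": "raise Tx power", "verdict": "deny", "rationale": "EIRP limit"})
```

Calling `log.verify()` recomputes every hash, so an auditor can confirm that recorded decisions and rationales have not been altered after the fact.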

TECHNIQUES FOR GUARDRAILING AI DECISIONS

Guardrailing requires checks before, during, and after the decisions are made by the agentic AI system.

Before the Decision

- Constrained optimization within safe operating envelopes. A drone delivery agent cannot plan routes beyond its network coverage.

- Action masking during decoding. In a live network, an agent cannot propose deactivating all cells/spectrum bands at once because such an action is masked.

- Sandboxing of tools with limited write privileges. An agent can only adjust parameters in a digital twin environment before impacting production traffic.
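The action-masking idea above can be sketched as follows. This is a simplified illustration that masks whole candidate actions rather than individual tokens during LLM decoding; the action names and scores are hypothetical.

```python
import math


def mask_actions(scores, forbidden):
    """Set the score of every forbidden action to -inf so it can never be chosen."""
    return {
        action: (-math.inf if action in forbidden else score)
        for action, score in scores.items()
    }


def select_action(scores, forbidden):
    """Pick the highest-scoring action that is not masked."""
    masked = mask_actions(scores, forbidden)
    best = max(masked, key=masked.get)
    if masked[best] == -math.inf:
        raise ValueError("no permitted action available")
    return best


# Hypothetical agent preferences: the unsafe action scores highest.
scores = {
    "deactivate_all_cells": 0.9,
    "reduce_tx_power_cell_12": 0.6,
    "no_op": 0.1,
}
forbidden = {"deactivate_all_cells"}
chosen = select_action(scores, forbidden)
```

Because the mask is applied before selection, the unsafe action is excluded regardless of how strongly the agent prefers it.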

During Decision Making

- Schema validation and strict JSON outputs. In network slicing, validation ensures that only integers within the permitted bandwidth ranges are allocated.

- Policy enforcement with OPA/Rego rules. Agent cannot route traffic through international gateways that violate data sovereignty laws.

- Digital twin impact assessment. Network optimization agent runs its load balancing proposal on a 5G digital twin to ensure ultra-low latency slices remain within the operator SLA.

- Canary deployments with rollback options. A new AI-based handover algorithm is rolled out to just one cluster of base stations, and, if dropped call rate rises, the rollout is reversed.
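A minimal sketch of the schema validation step, using only the Python standard library, is shown below. The field names (`slice`, `bandwidth_mhz`), the allowed slice names and the 5 to 100 MHz range are assumptions chosen for illustration. Policy rules such as data sovereignty would sit in a separate policy engine and are not modelled here.

```python
import json

# Hypothetical schema for a slice bandwidth allocation, for illustration only.
ALLOWED_SLICES = {"voice", "video", "iot"}
MIN_BW_MHZ, MAX_BW_MHZ = 5, 100


def parse_allocation(raw):
    """Parse agent output and reject anything outside the expected schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or set(data) != {"slice", "bandwidth_mhz"}:
        raise ValueError("expected exactly the keys 'slice' and 'bandwidth_mhz'")
    if not isinstance(data["slice"], str) or data["slice"] not in ALLOWED_SLICES:
        raise ValueError(f"unknown slice: {data['slice']!r}")
    bandwidth = data["bandwidth_mhz"]
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(bandwidth, bool) or not isinstance(bandwidth, int):
        raise ValueError("'bandwidth_mhz' must be an integer")
    if not MIN_BW_MHZ <= bandwidth <= MAX_BW_MHZ:
        raise ValueError(
            f"'bandwidth_mhz' must be between {MIN_BW_MHZ} and {MAX_BW_MHZ}"
        )
    return data
```

Rejecting unknown keys and non-integer values outright, instead of coercing them, keeps the boundary deterministic: an output either matches the schema exactly or is not applied.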

After the Decision

- Monitoring of KPIs such as latency and slice fairness. An agent monitors slice fairness, and, if one tenant’s traffic dominates unfairly, a corrective action is flagged.

- Auto-rollback on threshold violations. For example, if latency spikes in an AI-optimized RAN slice with new scheduler parameters, the system reverts to the previous scheduler configuration.

- Counterfactual analysis to test decision robustness. A routing agent picks path A for data traffic but counterfactuals show path B could have reduced congestion further, feeding insights back into retraining.
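The monitoring and auto-rollback checks above can be sketched as a single decision function. Jain's fairness index is used here as one common way to quantify slice fairness; the source does not name a specific metric, and the thresholds and function names are assumptions for illustration.

```python
def jain_fairness(throughputs):
    """Jain's index: 1.0 when all slices get equal throughput, 1/n when one gets all."""
    n = len(throughputs)
    squares = sum(x * x for x in throughputs)
    if n == 0 or squares == 0:
        raise ValueError("need at least one non-zero throughput")
    total = sum(throughputs)
    return (total * total) / (n * squares)


def select_config(active, previous, latency_ms, throughputs,
                  max_latency_ms=10.0, min_fairness=0.8):
    """Return the configuration that should be live after this KPI sample."""
    if latency_ms > max_latency_ms:
        return previous  # latency threshold violated: revert
    if jain_fairness(throughputs) < min_fairness:
        return previous  # one slice dominates unfairly: revert
    return active
```

For example, `select_config("new_scheduler", "old_scheduler", 25.0, [10, 10, 10])` falls back to `"old_scheduler"` because the latency sample exceeds the assumed 10 ms limit. A production system would normally evaluate a window of samples instead of a single one, to avoid reverting on a transient spike.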

POTENTIAL RESEARCH DIRECTIONS

To strengthen guardrailing in real-world agentic AI deployments, several areas need deeper research. Some prominent ones are listed below.

- Adaptive Guardrails: Guardrails that evolve dynamically – tightening or relaxing based on real-time conditions and past system performance.

- Explainability & Causality: Beyond interpretability dashboards, causal reasoning methods that let operators trace why the AI selected an action and what upstream factors influenced it.

- Cross-Domain Guardrails: Unified frameworks that can enforce safety consistently across domains such as finance, telecom, and healthcare without needing bespoke policies for each.

- Human-in-the-Loop Auditing: Interfaces that allow scalable oversight, where humans intervene only on high-risk or ambiguous cases without slowing down routine decisions.

- Formal Verification of AI Policies: Applying formal methods and model checking to prove mathematically that certain unsafe actions can never be executed.

- Robustness to Adversarial Inputs: Ensuring guardrails cannot be bypassed by adversarial prompts, corrupted data, or malicious environment manipulation.

About the Author

Mohinder Pal (MP) Singh is a proven technology leader with over 30 years of R&D leadership experience in large Tier-1 Indian and multinational telecom/IT organizations, specializing in software and product engineering, R&D operations, system engineering, and project/program management. He has been recognized for building high-caliber telecom and software R&D organizations, leading successful research collaborations with academia, and delivering impactful R&D outcomes aligned with India’s national technological priorities.

His current interest is to lead indigenous, cost-effective and sovereign AI/ML-driven innovations for India’s strategic technological infrastructure, such as Communications, Defense, Power, Agritech and Homeland Security.

You may reach him on LinkedIn: https://www.linkedin.com/in/mpsingh1912/